The weights are from the final fully connected layer of the network for that class. The class activation map for a specific class is the activation map of the ReLU layer, weighted by how much each activation contributes to the final score of the class. ImageActivations = activations(net,imResized,layerName)

I considered adding a helper function to get the layer name, but it’ll take you 2 seconds to grab the name from this table: Network Name We want the ReLU layer that follows the last convolutional layer. *And to be clear, this is all documented functionality - we're not going off the grid here! Grab the input size, the class names, and the layer name from which we want to extract the activations. Net = eval(netName) Then we get our webcam running. SqueezeNet, GoogLeNet, ResNet-18 are good choices, since they’re relatively fast. First, read in a pretrained network for image classification. Of course, you can always feed an image into these lines instead of a webcam. To make it more fun, let’s do this “live” using a webcam. The end result will look something like this: This can show what areas of an image accounted for the network's prediction.

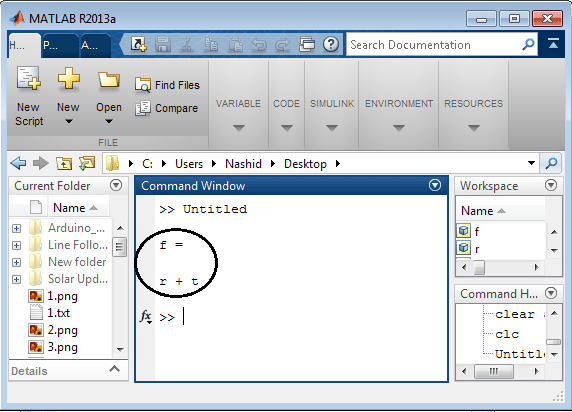

Visualize hyperimage matlab code#

I was surprised at how easy this code was to understand: just a few lines of code that provides insight into a network. CAM Visualizations This is to help answer the question: “How did my network decide which category an image falls under?” With class activation mapping, or CAM, you can uncover which region of an image mostly strongly influenced the network prediction. Today, I’d like to write about another visualization you can do in MATLAB for deep learning, that you won’t find by reading the documentation*. Last post, we discussed visualizations of features learned by a neural network. I’m hoping by now you’ve heard that MATLAB has great visualizations, which can be helpful in deep learning to help uncover what’s going on inside your neural network.
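To make the weighting described above concrete, here is a minimal sketch in plain MATLAB (no toolboxes). It runs on a tiny made-up activation array rather than a real network. It assumes the ReLU layer feeds global average pooling and then a single fully connected layer. `fcWeights` and `fcBias` are hypothetical stand-ins for that layer's `Weights` (classes × channels) and `Bias` properties, and every size and value here is invented for illustration.

```matlab
% Toy stand-in for the output of activations(net,imResized,layerName):
% a 2-by-2 spatial grid with 3 channels (H x W x C). Values are made up.
imageActivations = zeros(2, 2, 3);
imageActivations(1, 1, 1) = 4;   % channel 1 fires in the top-left cell
imageActivations(2, 2, 2) = 8;   % channel 2 fires in the bottom-right cell
imageActivations(:, :, 3) = 1;   % channel 3 is uniform everywhere

% Hypothetical final fully connected layer: K classes x C channels.
fcWeights = [1 0 0;    % class 1 relies on channel 1
             0 1 0];   % class 2 relies on channel 2
fcBias = [0; 0];

% Global average pooling: one mean activation per channel (C x 1).
pooled = squeeze(mean(mean(imageActivations, 1), 2));

% Class scores from the fully connected layer; keep the top-scoring class.
scores = fcWeights * pooled + fcBias;
[~, classId] = max(scores);

% Weight each channel's map by that class's FC weight, then sum over
% channels to get the class activation map (H x W).
channelWeights = reshape(fcWeights(classId, :), 1, 1, []);
classActivationMap = sum(imageActivations .* channelWeights, 3);
```

With these toy numbers, class 2 scores highest. Its map keeps only channel 2, so the bottom-right cell lights up. Passing a different `classId` shows the evidence for any other class.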

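The class activation map comes out at the spatial resolution of the ReLU layer, which is coarser than the input image. Overlaying it on the image as a heatmap therefore usually means rescaling and upsampling it first. The sketch below is an assumption, not the post's own code. It normalizes a toy map to [0, 1] and upsamples it by nearest-neighbour repetition with `repelem`. The 2× scale factor is hypothetical; in practice `imresize` (Image Processing Toolbox) to the network's input size gives a smoother, interpolated map.

```matlab
% Toy class activation map (H x W); values are made up for illustration.
classActivationMap = [0 2; 4 8];

% Rescale to [0, 1] for display; guard against a constant map, where
% max == min would otherwise divide by zero.
camMin = min(classActivationMap(:));
camRange = max(classActivationMap(:)) - camMin;
if camRange > 0
    camNorm = (classActivationMap - camMin) / camRange;
else
    camNorm = zeros(size(classActivationMap));
end

% Nearest-neighbour upsampling: repeat each cell scaleFactor times along
% rows and columns (hypothetical 2x factor, giving a 4 x 4 result).
scaleFactor = 2;
camUpsampled = repelem(camNorm, scaleFactor, scaleFactor);
```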